Summary

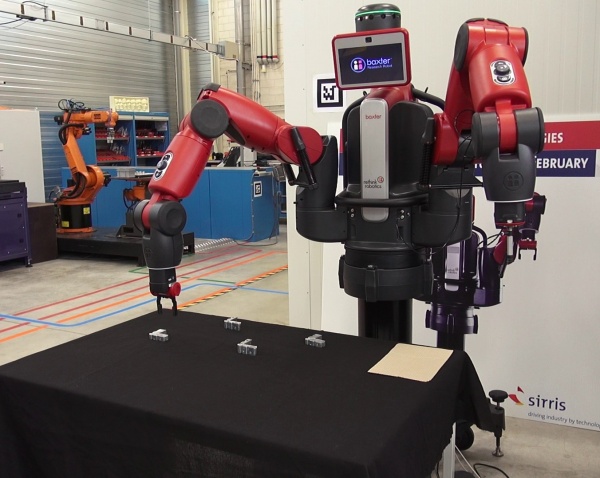

Baxter Pick and Learn is a proof of concept for an application in which an operator teaches a sorting task to Baxter. It uses a shape recognition algorithm to identify similar shapes and place them at a location previously demonstrated by the operator.

It was developed for an MA1 project at the VUB/ULB in collaboration with Sirris. The objective of the project was not to develop a reliable implementation but to research the possibilities offered by Baxter, so this is only meant as a proof of concept and still needs many improvements.

Documentation

The source code is available on GitHub: haxelion/baxter_pickandlearn

A demonstration video can be seen on YouTube: Baxter Pick And Learn

For more information about the implementation, see sections 5 and 6 of the project report.

Installation

It requires Baxter SDK 0.7 or above, but has only been tested on version 0.7. It builds against version 1.0 and should theoretically work there.

$ cd ~/ros_ws/src

$ git clone 'https://github.com/haxelion/baxter_pickandlearn.git'

$ cd ~/ros_ws

$ catkin_make

$ rosrun baxter_pickandlearn pickandlearn

Known Limitations

Shape Orientation

The implementation does not take shape orientation into account, so shapes must always be presented in the same orientation. This could be solved fairly easily by computing the orientation from the geometric moments of the shape.
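As an illustration of the moment-based approach, here is a minimal sketch (not code from this project) that estimates the principal-axis angle of a binary mask from its second-order central moments, using the standard formula θ = ½·atan2(2μ11, μ20 − μ02). The function name `shape_orientation` and the list-of-rows mask format are assumptions made for this example.

```python
import math

def shape_orientation(mask):
    """Return the principal-axis angle in radians of a binary mask.

    `mask` is a list of rows containing 0/1 values (hypothetical format).
    The angle is measured from the x axis toward the y axis; in image
    coordinates the y axis points down.
    """
    pixels = [(x, y) for y, row in enumerate(mask)
              for x, value in enumerate(row) if value]
    if not pixels:
        raise ValueError("mask contains no shape pixels")

    # Centroid from the zeroth- and first-order moments.
    count = float(len(pixels))
    cx = sum(x for x, _ in pixels) / count
    cy = sum(y for _, y in pixels) / count

    # Second-order central moments.
    mu20 = sum((x - cx) ** 2 for x, _ in pixels)
    mu02 = sum((y - cy) ** 2 for _, y in pixels)
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels)

    return 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)


horizontal_bar = [[1, 1, 1, 1, 1]]
diagonal = [[1, 0, 0],
            [0, 1, 0],
            [0, 0, 1]]
print(math.degrees(shape_orientation(horizontal_bar)))
print(math.degrees(shape_orientation(diagonal)))
```

Two caveats apply to this technique: the angle is only defined up to 180 degrees, and it is meaningless for shapes whose second-order moments are isotropic, such as a square or a circle.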

Shape Recognition

The algorithm uses Hu moments to classify shapes. Although it generally produces good results, it also produces surprising false positives (for example, matching a square with a circle). It was chosen simply because OpenCV provides an implementation; a more discriminative shape classifier should be used for better results.
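One plausible explanation for the square and circle confusion is that both shapes are centrally symmetric and isotropic in their second-order moments, so every Hu invariant except the first vanishes for both, and the first differs only slightly (1/6 ≈ 0.167 for an ideal filled square versus 1/(2π) ≈ 0.159 for an ideal disk). The sketch below computes the first two Hu invariants on rasterized masks to show this. It is a standalone illustration, not the project's code, and the helper names are hypothetical; in OpenCV the full set of seven invariants is provided by `HuMoments`.

```python
def normalized_central_moments(mask):
    """Return (eta20, eta02, eta11) for a binary mask given as rows of 0/1."""
    pixels = [(x, y) for y, row in enumerate(mask)
              for x, value in enumerate(row) if value]
    area = float(len(pixels))
    cx = sum(x for x, _ in pixels) / area
    cy = sum(y for _, y in pixels) / area
    mu20 = sum((x - cx) ** 2 for x, _ in pixels)
    mu02 = sum((y - cy) ** 2 for _, y in pixels)
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels)
    # For second-order moments the scale normalization is area squared.
    return mu20 / area ** 2, mu02 / area ** 2, mu11 / area ** 2

def hu_first_two(mask):
    """Return the first two Hu invariants (h1, h2) of a binary mask."""
    eta20, eta02, eta11 = normalized_central_moments(mask)
    h1 = eta20 + eta02
    h2 = (eta20 - eta02) ** 2 + 4.0 * eta11 ** 2
    return h1, h2

def rectangle(width, height):
    """Filled rectangle mask."""
    return [[1] * width for _ in range(height)]

def disk(radius):
    """Filled disk mask of the given radius, centered in a square grid."""
    size = 2 * radius + 1
    return [[1 if (x - radius) ** 2 + (y - radius) ** 2 <= radius ** 2 else 0
             for x in range(size)] for y in range(size)]

for name, mask in [("square", rectangle(41, 41)),
                   ("disk", disk(20)),
                   ("elongated rectangle", rectangle(80, 20))]:
    h1, h2 = hu_first_two(mask)
    print(f"{name}: h1={h1:.4f} h2={h2:.6f}")
```

In this sketch the square and the disk end up with nearly identical signatures while the elongated rectangle is clearly separated, which suggests that a classifier relying on Hu moments alone needs either a tight threshold or additional features (for instance the contour's corner count) to tell the first two apart.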

Movement Speed And Precision

The overall task is performed slowly and imprecisely, not because of limitations of Baxter itself but because the arm controller implementation is too simple. An inverse kinematics solver dedicated to the task, combined with velocity control of the arm, should be used instead.
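To give an idea of what velocity-based inverse kinematics looks like, here is a minimal sketch of resolved-rate control with a damped least-squares Jacobian inverse on a toy two-link planar arm. This is a substitute illustration only: Baxter's arms have seven joints, and the link lengths, gain, damping and function names below are all hypothetical values chosen for the example, not taken from the project or the Baxter SDK.

```python
import math

# Hypothetical link lengths in metres (not Baxter's real geometry).
LINK1, LINK2 = 0.37, 0.37

def forward(q):
    """End-effector position (x, y) for joint angles q = (q0, q1)."""
    x = LINK1 * math.cos(q[0]) + LINK2 * math.cos(q[0] + q[1])
    y = LINK1 * math.sin(q[0]) + LINK2 * math.sin(q[0] + q[1])
    return x, y

def jacobian(q):
    """2x2 Jacobian of the end-effector position with respect to the joints."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return ((-LINK1 * s1 - LINK2 * s12, -LINK2 * s12),
            (LINK1 * c1 + LINK2 * c12, LINK2 * c12))

def joint_velocity(q, target, gain=2.0, damping=0.01):
    """Joint velocities that drive the end effector toward `target`.

    Computes qdot = J^T (J J^T + damping^2 I)^-1 v, where v is a
    proportional Cartesian velocity command.
    """
    x, y = forward(q)
    vx, vy = gain * (target[0] - x), gain * (target[1] - y)
    (a, b), (c, d) = jacobian(q)
    # A = J J^T + damping^2 I (symmetric 2x2), inverted in closed form.
    a11 = a * a + b * b + damping ** 2
    a12 = a * c + b * d
    a22 = c * c + d * d + damping ** 2
    det = a11 * a22 - a12 * a12
    wx = (a22 * vx - a12 * vy) / det
    wy = (-a12 * vx + a11 * vy) / det
    return a * wx + c * wy, b * wx + d * wy

def move_to(q, target, dt=0.01, steps=1000):
    """Integrate the velocity command with explicit Euler steps."""
    for _ in range(steps):
        dq0, dq1 = joint_velocity(q, target)
        q = (q[0] + dq0 * dt, q[1] + dq1 * dt)
    return q

final_q = move_to((0.3, 1.2), (0.4, 0.3))
print(forward(final_q))
```

The damping term keeps the commanded joint velocities bounded near singular configurations, at the cost of slightly slower convergence. On a real robot the computed velocities would be sent to the arm at a fixed control rate rather than integrated in a loop.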